How to Evaluate AI Tools When You're Not Technical: A Framework for Business Leaders

Business leaders don't need to understand neural networks to evaluate AI tools effectively. Learn a practical framework for assessing AI solutions based on business outcomes, not technical specifications.


A marketing director received proposals from three AI content generation vendors, each claiming superior "transformer architectures," "fine-tuned models," and "advanced natural language processing." She had no idea what these terms meant or how to compare them. So she did what effective non-technical leaders do: she asked her team to run a pilot project with real content, measured the results against clear business metrics, and selected the tool that delivered the best outcomes. The technical specifications turned out to be irrelevant. What mattered was whether the tool helped her team produce better content faster.

This practical, outcome-focused approach to AI tool evaluation works better than trying to understand technical details that don't translate to business value. Non-technical leaders don't need to become AI experts to make good AI tool decisions—they need a framework for evaluating tools based on business outcomes, user experience, and organizational fit.

Start With Business Outcomes, Not Technical Features

AI vendors love to discuss their technical capabilities: model architectures, training data size, accuracy metrics, and processing speeds. These specifications might matter to data scientists, but on their own they rarely tell a business leader whether a tool will solve a practical problem. A customer service director doesn't care whether a chatbot uses GPT-4 or Claude. She cares whether it resolves customer issues effectively, reduces support costs, and improves satisfaction scores.

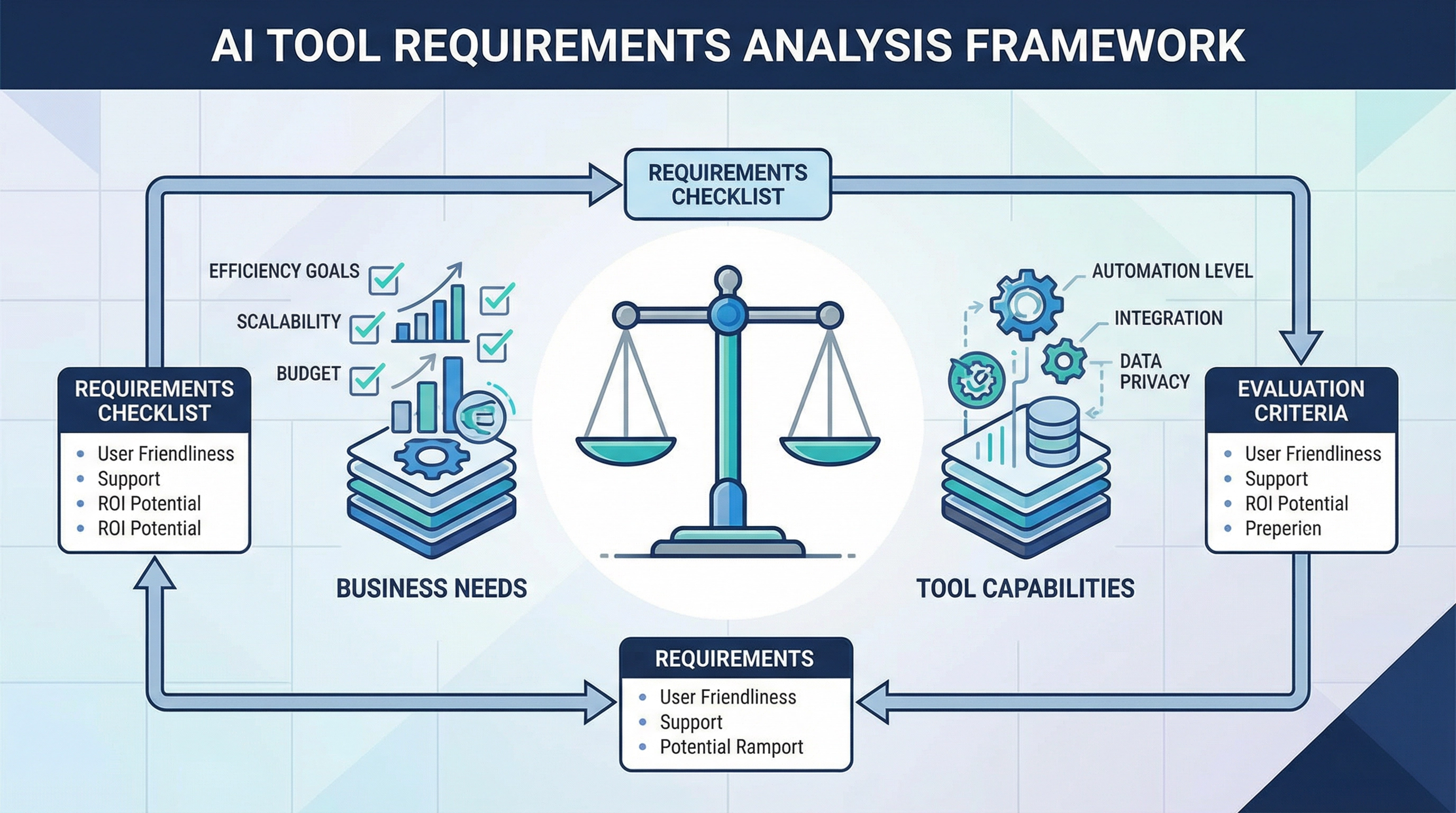

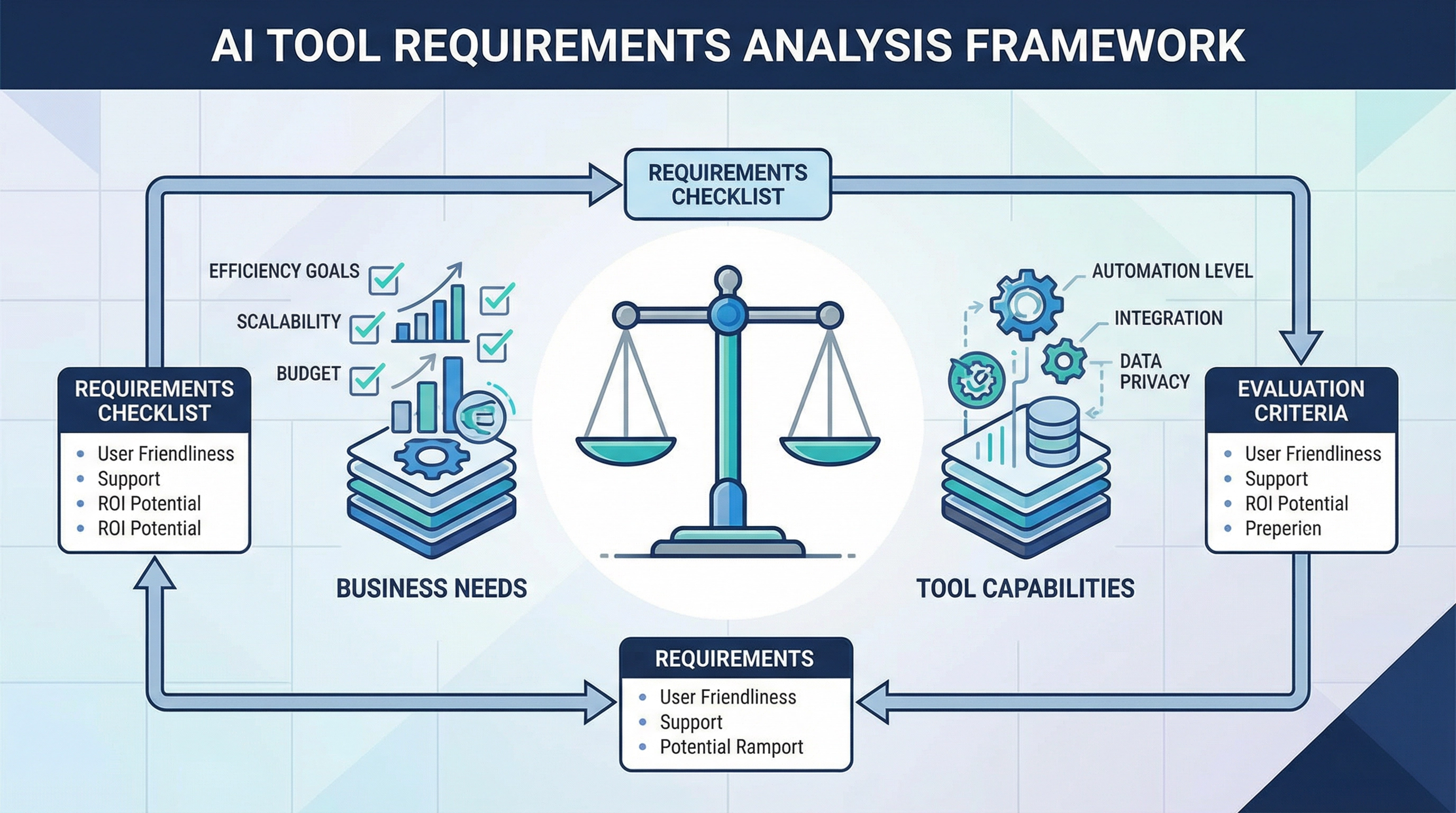

Define your success criteria in business terms before evaluating any AI tools. What specific problem are you trying to solve? What outcomes would indicate success? How will you measure improvement? A sales team evaluating AI lead scoring tools should focus on metrics like conversion rates, sales cycle length, and revenue per lead—not on the tool's machine learning algorithms or data processing capabilities.

Create a scorecard that translates technical capabilities into business outcomes. Instead of "advanced natural language processing," ask "Can this tool understand our customers' questions accurately enough to provide helpful responses?" Instead of "99.9% uptime SLA," ask "Will this tool be available when our team needs it, and what happens if it's not?" This translation forces vendors to explain their capabilities in terms that matter to your business rather than hiding behind technical jargon.

Test AI tools against real work scenarios, not vendor demos. Vendors design demos to showcase their tools' strengths while avoiding weaknesses. A real-world pilot with your actual data, users, and workflows will reveal whether the tool delivers value in your specific context. A content team should test an AI writing tool with their actual content types, brand guidelines, and quality standards—not with the generic examples vendors provide.

Evaluate User Experience, Not Just Capabilities

An AI tool might have impressive capabilities, but if your team won't use it, those capabilities don't matter. A sales team abandoned an AI forecasting tool that provided highly accurate predictions because the interface was confusing, the workflow didn't match their process, and generating forecasts took longer than their previous manual approach. The tool worked—it just didn't work for them.

Involve actual users in AI tool evaluation from the beginning. Don't rely on executive demos or IT department assessments—get the tool into the hands of the people who will use it daily. A customer service team evaluating AI chatbots should have frontline agents test the tools, not just managers reviewing vendor presentations. Frontline users will quickly identify usability issues, workflow mismatches, and practical limitations that aren't apparent in controlled demos.

Pay attention to adoption friction during pilot projects. How long does it take new users to become productive with the tool? What training and support do they need? What workarounds do they develop to avoid using the tool? High adoption friction indicates that the tool doesn't fit your team's working patterns, regardless of its technical capabilities. A tool that requires extensive training, constant troubleshooting, or frequent workarounds will never deliver its promised value.

Evaluate whether the AI tool enhances or disrupts your team's existing workflow. The best AI tools feel like natural extensions of how people already work—they reduce friction rather than creating it. A design team might love an AI image generator that integrates with their existing design software but reject a more powerful tool that requires them to switch between multiple applications. Workflow fit matters more than feature lists.

Assess Organizational Fit Beyond the Tool Itself

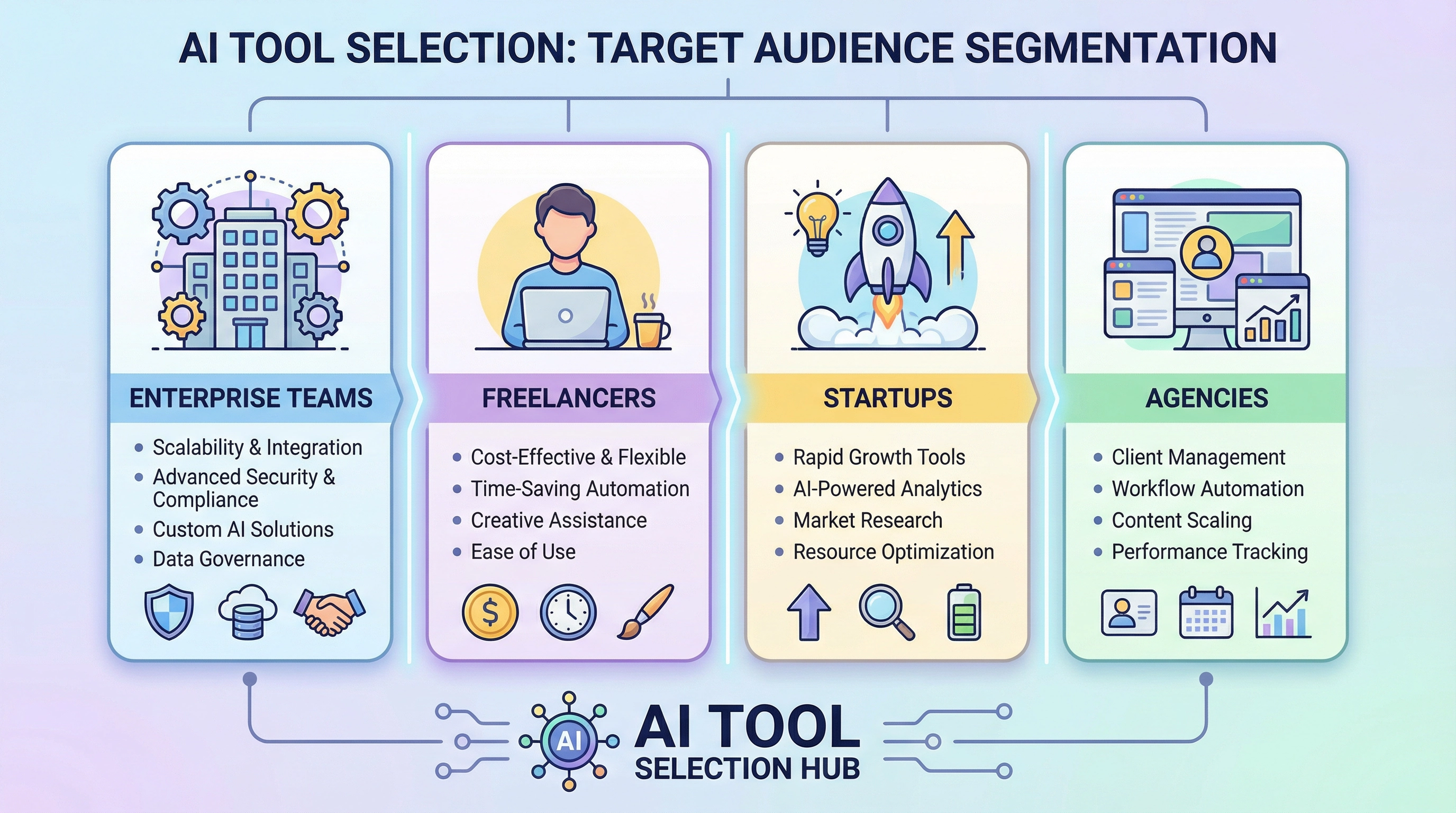

AI tool success depends on more than the tool's capabilities—it depends on whether your organization can effectively implement, support, and evolve with the tool. A small business with limited IT resources shouldn't adopt an AI tool that requires extensive technical configuration, ongoing maintenance, and specialized expertise. A large enterprise with strict security requirements shouldn't adopt an AI tool that can't meet their compliance and governance standards.

Evaluate your organization's readiness for AI tool adoption. Do you have the technical skills needed to implement and maintain the tool? Can your IT infrastructure support it? Do your security and compliance requirements allow it? Will your team embrace it or resist it? These organizational factors often determine AI tool success more than the tool's technical capabilities.

Consider the vendor relationship as carefully as the tool itself. You're not just buying software—you're entering a partnership with a vendor who will support your implementation, address issues, and evolve the tool over time. Evaluate the vendor's stability, support quality, customer references, and product roadmap. A technically superior tool from an unstable vendor or one with poor support creates more risk than a slightly less capable tool from a reliable partner.

Assess the total cost of ownership, not just the subscription price. Include implementation costs, training expenses, ongoing support needs, integration work, and the productivity impact during the learning curve. A tool with a $500 monthly subscription ($6,000 a year) might cost $20,000 in the first year once you include implementation, training, and lost productivity. A more expensive tool with better onboarding and support might actually cost less overall.
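For teams that want to make this arithmetic explicit, the following is a minimal Python sketch of a first-year cost tally. The cost categories follow the paragraph above, but the class name `FirstYearCosts` and every dollar amount other than the $500 subscription and the $20,000 total are hypothetical, chosen only so the numbers add up.

```python
from dataclasses import dataclass


@dataclass
class FirstYearCosts:
    """First-year cost components for one tool (hypothetical structure)."""

    monthly_subscription: float
    implementation: float = 0.0
    training: float = 0.0
    integration: float = 0.0
    support: float = 0.0
    lost_productivity: float = 0.0

    def subscription_total(self) -> float:
        # Twelve months of subscription fees.
        return self.monthly_subscription * 12

    def total(self) -> float:
        # Subscription plus every one-time and ongoing cost in year one.
        return (
            self.subscription_total()
            + self.implementation
            + self.training
            + self.integration
            + self.support
            + self.lost_productivity
        )


# Illustrative split only: the article states the $500 and $20,000 figures,
# not how the remaining $14,000 breaks down.
cheap_tool = FirstYearCosts(
    monthly_subscription=500,
    implementation=5_000,
    training=3_000,
    integration=2_000,
    support=1_000,
    lost_productivity=3_000,
)

print(f"Subscription only: ${cheap_tool.subscription_total():,.0f}")
print(f"First-year total:  ${cheap_tool.total():,.0f}")
```

Filling in the same structure for each shortlisted tool makes it easier to see when a higher subscription price is offset by lower implementation and training costs. A spreadsheet with the same columns works just as well.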

Use a Structured Evaluation Process

Ad hoc AI tool evaluation leads to inconsistent decisions, missed requirements, and tools that don't deliver expected value. A structured process ensures you evaluate all relevant factors and make defensible decisions. Start by documenting your requirements: what problem you're solving, what success looks like, who will use the tool, and what constraints you're working within.

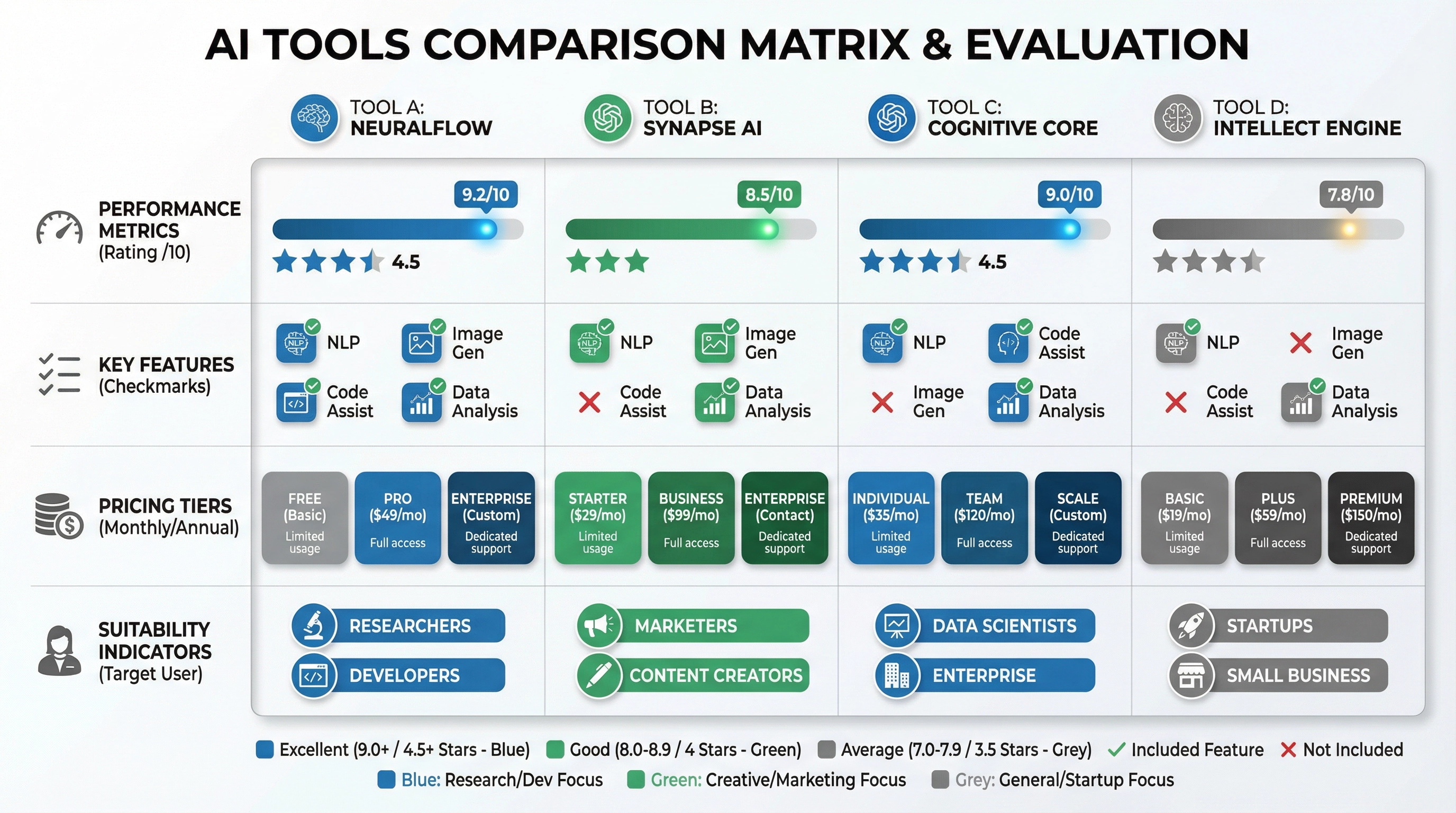

Create an evaluation rubric that scores tools across multiple dimensions: business outcomes, user experience, organizational fit, cost, vendor relationship, and risk factors. Weight these dimensions based on what matters most to your situation. A startup might weight cost and ease of use heavily. An enterprise might prioritize security, compliance, and vendor stability. Your rubric should reflect your organization's priorities, not generic best practices.
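One way to turn such a rubric into a single comparable number is a weighted average. The sketch below is illustrative only: the dimension names come from the paragraph above, while the weights, the 1-to-5 scores, and the function name `weighted_score` are assumptions you would replace with your own.

```python
# Hypothetical weights; they should reflect your priorities and sum to 1.0.
WEIGHTS = {
    "business_outcomes": 0.30,
    "user_experience": 0.20,
    "organizational_fit": 0.15,
    "cost": 0.15,
    "vendor_relationship": 0.10,
    "risk": 0.10,
}


def weighted_score(scores, weights=WEIGHTS):
    """Return the weighted average of 1-5 scores across all dimensions."""
    if set(scores) != set(weights):
        raise ValueError("scores must cover exactly the rubric dimensions")
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    return sum(weights[dim] * scores[dim] for dim in weights)


# Hypothetical scores from evaluators (5 = best; for "risk", 5 = lowest risk).
tool_a = {
    "business_outcomes": 4,
    "user_experience": 3,
    "organizational_fit": 4,
    "cost": 5,
    "vendor_relationship": 3,
    "risk": 4,
}
tool_b = {
    "business_outcomes": 5,
    "user_experience": 4,
    "organizational_fit": 3,
    "cost": 2,
    "vendor_relationship": 4,
    "risk": 3,
}

# Rank the tools from highest to lowest weighted score.
ranked = sorted(
    {"Tool A": tool_a, "Tool B": tool_b}.items(),
    key=lambda item: weighted_score(item[1]),
    reverse=True,
)
for name, scores in ranked:
    print(f"{name}: {weighted_score(scores):.2f}")
```

Changing the weights changes the ranking, which is the point: with these example numbers, raising the weight on business outcomes and lowering the weight on cost would move Tool B ahead of Tool A.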

Run structured pilot projects with shortlisted tools. Define the pilot scope, duration, success metrics, and decision criteria upfront. Use the same test scenarios across all tools so you can compare results fairly. Involve representative users and measure both quantitative metrics (time saved, accuracy improved, costs reduced) and qualitative feedback (user satisfaction, workflow fit, support quality).
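The quantitative side of a pilot comparison can be as simple as measuring each tool against the same pre-pilot baseline. The following is a rough sketch under that assumption; the metric names, baseline values, and pilot results are all made up for illustration.

```python
# For each hypothetical metric, record whether a higher value is better.
HIGHER_IS_BETTER = {
    "hours_per_task": False,
    "error_rate_pct": False,
    "user_satisfaction": True,
}


def improvement_pct(metric, baseline, pilot):
    """Percent improvement over baseline; positive always means better."""
    change = (pilot - baseline) / baseline * 100
    return change if HIGHER_IS_BETTER[metric] else -change


# Baseline measured before the pilot, using the same test scenarios.
baseline = {"hours_per_task": 4.0, "error_rate_pct": 10.0, "user_satisfaction": 3.0}

# Results for each shortlisted tool on those same scenarios (made-up numbers).
pilots = {
    "Tool A": {"hours_per_task": 3.0, "error_rate_pct": 8.0, "user_satisfaction": 3.6},
    "Tool B": {"hours_per_task": 2.0, "error_rate_pct": 12.0, "user_satisfaction": 3.3},
}

for tool, results in pilots.items():
    print(tool)
    for metric, value in results.items():
        pct = improvement_pct(metric, baseline[metric], value)
        print(f"  {metric}: {pct:+.0f}%")
```

In this invented example, Tool B saves more time but makes more errors than the baseline, a trade-off that a single headline number would hide. Qualitative feedback from users still needs to be weighed alongside these figures.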

Document your evaluation process and decision rationale. When you revisit the decision six months later—either to expand usage or reconsider the choice—you'll want to remember what factors drove your decision and what alternatives you considered. This documentation also helps you refine your evaluation process for future AI tool decisions.

Common Evaluation Mistakes to Avoid

Don't let vendor hype drive your decision. AI vendors excel at creating excitement about their technology's potential while downplaying limitations and implementation challenges. Stay focused on your specific requirements and how the tool performs against them, not on impressive demos or visionary promises about AI's future.

Don't assume that popular tools will work for you. A tool that works perfectly for other companies might not fit your specific needs, workflows, or constraints. Evaluate tools based on your requirements, not on market share or brand recognition. The most popular AI writing tool might be wrong for a team that needs specialized industry knowledge or strict compliance controls.

Don't skip the pilot project. Vendor demos and reference calls provide useful information, but they can't replace hands-on testing with your actual data, users, and workflows. A two-week pilot project will reveal more about whether a tool fits your needs than months of vendor presentations and reference checks.

Don't evaluate tools in isolation. Consider how an AI tool fits into your broader technology ecosystem, workflow patterns, and organizational capabilities. A tool that works well standalone might create problems when integrated with your existing systems. A tool that requires capabilities your organization doesn't have will fail regardless of its technical merits.

Making Confident AI Tool Decisions

Non-technical leaders can make excellent AI tool decisions by focusing on business outcomes, user experience, and organizational fit rather than trying to understand technical specifications. Define success in business terms, involve actual users in evaluation, assess organizational readiness, and use a structured process that ensures you consider all relevant factors.

The marketing director who evaluated AI content tools based on real content performance rather than technical specifications made a better decision than if she'd tried to understand transformer architectures and natural language processing algorithms. She focused on what mattered, whether the tool helped her team produce better content faster, and set the technical details aside.

This practical, outcome-focused approach works across all AI tool categories and business contexts. You don't need to become an AI expert to evaluate AI tools effectively. You need a clear understanding of your requirements, a structured evaluation process, and the discipline to focus on business outcomes rather than technical hype.
